Living user story documentation

I’m not sure if living user story documentation is something anyone else does, but I want to talk about why it evolved in my agency, the benefits we saw, and how we implemented it.

Some caveats:

- This is pretty specific to client services work, and maybe not so much product or SaaS work (but you still may find it beneficial)!

- I know documentation can be controversial, and that the Agile manifesto implores “Working software over comprehensive documentation” - but after running a software agency for 8 years, I can confidently say that writing functional documentation (how things behave, not necessarily how they work behind the scenes) contributed greatly to the success of all of our projects, and had a large positive impact on the relationships with our clients.

- I also know that using user stories as ongoing living documentation is probably controversial too, as the original goal of user stories (in agile-land) is to be a “placeholder for a conversation”, not to capture all the detail of every requirement, but I can only talk about what worked for me.

Quick overview of User stories (and friends) 📚

Note: Skip this section if you’re familiar with user stories and acceptance criteria.

One of the best things to come out of agile development is the structured approach to describing a system, and making it a collaborative practice alongside your client and other stakeholders.

When mapping features of the system with my clients, I start with getting them to identify all the users that interact with the system, e.g.

- Car Buyer

- Car Seller

- Site Administrator

After that we identify a few specific personas for each of those users. These are fictional characters that help us gain a better understanding of who’s involved (the client generally knows their end users, so they are best placed to identify these), e.g.

Persona for Car Buyer

- Name: Jeff Roberts

- Age: 38

- Gender: Male

- Location: Melbourne, Australia

- Work: Accountant

- Motivations / Fears / Frustrations etc.

We then create user stories which represent the high level features of the system. In my agency, user stories formed the basis of our system scoping. User stories are written in the format “As a <user>, I want <goal>, So <reason>”, e.g.

As a Car Seller,

I want to sign up to create an account with CarFinders,

So I can browse and search for

Whilst a user story is a high level intro, the acceptance criteria for each user story are where you and your client actually agree on what needs to be implemented, e.g.

- The signup form has the fields name, email, password, confirm password etc

- Name must be less than 256 characters

- Password and confirm password must be the same value etc.
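
To show how precisely criteria at this level pin down behaviour, here is a minimal Python sketch that checks a signup submission against two of the criteria above. The function name `check_signup` and its parameters are hypothetical, made up purely for illustration, and are not from any real project.

```python
def check_signup(name: str, password: str, confirm_password: str) -> list[str]:
    """Return the acceptance criteria a submission violates (empty list if none)."""
    failures = []
    # Criterion: "Name must be less than 256 characters"
    if len(name) >= 256:
        failures.append("Name must be less than 256 characters")
    # Criterion: "Password and confirm password must be the same value"
    if password != confirm_password:
        failures.append("Password and confirm password must be the same value")
    return failures


# A valid submission violates nothing; a mismatched password violates one criterion.
valid = check_signup("Jeff Roberts", "s3cret", "s3cret")
mismatch = check_signup("Jeff Roberts", "s3cret", "secret")
```

Each failure message is the wording of the criterion itself, so the client, the developer and the tester are all reading the same sentence.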

If you’ve never worked with these before there is heaps more info out there on the net, and it’s a good framework for collaboratively capturing the scope of the project.

How I used to leverage User stories 📕

In a project workshop I would pop open a new Google doc, and work with the client to identify the users involved in the system. We’d then create a couple of personas for each user, and then start to capture the user stories for each user. We would then break down the user stories into high level acceptance criteria. That would be the initial step, and after the project workshop I would review what we captured, ask the client a further bunch of questions, and usually update/finesse acceptance criteria based on those responses.

At this point, with the user stories and the other outcomes of the project workshop, I had enough data for a high level project estimate, which I would bundle in with a detailed project proposal and send off to the client.

Assuming the client then chose my agency for the project, when it was nearing the scheduled start date I would pull up the Google doc with the user stories, create a project in JIRA, then create tasks (of type “Story”) for each user story previously identified. I would copy the user story and acceptance criteria into the “description” of the task, and put all the tasks in the backlog (we’d also make some non-functional tasks here such as server setup, deployment setup, security testing etc). I would then work with the client to identify what sprint 1 should look like, and then go over each task again and confirm/refine the acceptance criteria. This would repeat for each sprint.

Development would kick off, and the tasks would be assigned to developers for each sprint.

As with all software projects, when the client started seeing their project materialise they would add a few features (or a lot of features!), and some changes - no worries, these were created as new tasks in the backlog. Comments were left on the JIRA tasks to help with testing notes, or client feedback, but we didn’t bother changing the user stories in the original Google doc. We just kept it all in JIRA, as that had become the source of truth once we copied the user stories over.

Let’s assume the world’s a perfect place, and after 4 months of hard work the project was delivered on time, on budget, and just as the client expected. Nailed it.

Where the cracks start to form 😬

The project was a success, and a few months after go-live the client had some more cash, and a huge list of new features and changes they wanted to implement. Awesome, recurring business is exactly what we want as a software agency.

Now right away I know that those initial user stories that were written many months ago are stale, because as soon as new tasks were added directly in JIRA and not in the initial Google doc, things became out of sync. Similarly, those old JIRA tasks with the comments will not all be re-read and referenced, as once a sprint is set to “finished” who knows where those tasks go 🙂 But that’s not the end of the world, as the initial user stories are not really documentation so to speak; they were more a temporary tool to get a job done. And that’s fine, we can simply create a new Google doc, and create new user stories for the new features.

What does become a bit tricky is describing changes to existing user stories (that were already implemented), as we don’t have the source of truth anymore. But we will do our best, maybe something like “As a User, I want to see a new CAPTCHA field on signup, So I can prove I’m not a robot”.

Because you build amazing software, your client’s customers are happy and your client keeps coming back - great work. Maybe they even commit to an ongoing retainer, where you’re making updates to their system every month or so. A gold mine for a service business.

But as the system grows and grows, at some point you realise there are so many features and nuances to the system that it’s just too big for a single person to keep it all in their head. Both your team and the client’s team start to forget about adverse effects a change may have, and bugs start to slip in more and more (e.g. no one remembered that changing the foobar widget would affect the automated nightly email job). And while a full automated test suite can help with these types of errors, not understanding a system still affects things like estimates (e.g. we thought it was a day in sprint planning, but unfortunately no one remembered X, Y, Z and now it’s more like a week).

Your developers also need to work across multiple projects, so unfortunately the ones that worked on the last sprint are not always available to work on the next sprint. This is common, but on a complex system it can lead to unnecessary bugs (e.g. Michael was unaware that 3 sprints ago we introduced user customisable currencies, so whilst fixing a bug in the payment reminder email, he actually introduced another one, as he assumed it was still all in US dollars).

You get the point: more bugs, quality slips, and developers find it harder to work on the system. But let’s say that because you still do great work, the client is still happy and sticks with you for many years.

For the first 4 years of my agency, that was what it looked like.

We made people happy, and the better we got as a business, the larger projects we won. And the larger, longer running projects we won, the more projects we would have to run in parallel. The more parallel projects, the more developers moved around, the more knowledge got lost, the more user stories grew stale. Some developers became so integral due to the knowledge they had accrued, that the thought of them leaving could keep me up at night. I knew this was a red flag, and something I had always wanted to avoid - as previously as a programmer I HATED the feeling of being trapped on a project. Good devs leave good jobs for reasons like that.

What’s wrong with comprehensive documentation? 📜

Whilst I was never an agile purist, I knew that “comprehensive documentation” was deemed bad, a way of the past, and stank of that term that should not be mentioned… waterfall development.

But I think the historical issue with comprehensive documentation is more to do with a 250 page bible of upfront instructions that was put together by an entire floor of people in suits, who never actually worked alongside the client to ensure it met their true needs. The documentation problem was convoluted UML diagrams created by architecture astronauts (in those same suits!) and then sent over to the developers on the floor below to implement them verbatim. On top of all that, the original 250 page bible was stale by 4pm on the first day of development, and by then the business analysts were working on other new projects, so no one kept it up to date.

Stale comprehensive documentation is worse than no documentation at all, because you trust it, and it lies to you. It is a gigantic waste of everyone’s time.

However, I believe comprehensive documentation that is living and trustworthy, and that describes the functionality but not how to implement it, can be a fantastic asset.

Mending the cracks 🛠

I had known about living documentation for a long time, and having worked in agile companies previously, I was familiar with concepts such as BDD (Behaviour Driven Development) and tools such as Cucumber Studio, which can take your documentation about specific features (written in individual text files in a specific syntax called Gherkin) and actually execute it as software tests. We initially tried this on a couple of projects, and whilst we liked parts of it, I found it cumbersome to write in the specific syntax, and clients also found it hard to read and sign off the requirements. My developers also found it more of a barrier to keeping things updated (which completely defeats the purpose), so after looking for alternatives, we realised there wasn’t an off-the-shelf solution that could fix this multi-faceted problem.

So after some trial and error, we ultimately came up with a simple(ish) solution that met our needs well:

- Writing the user stories in one central place, including the story and the acceptance criteria (we used Confluence, but any similar wiki should be fine)

- Each user story has its own unique URL, so you can easily link back to the feature (and never copy-and-paste its contents)

- Version control, so you can always peek back in time to see the evolution of a story or acceptance criteria

- Revising the stories in one central place as changes are agreed upon with the client

- Putting a process in place to ensure the stories actually get revised (the previous point)

- Be able to clearly show “future state” changes in the user story and acceptance criteria, vs what is currently in production

- Be able to link user stories via acceptance criteria, allowing you to build a web that can be browsed, as opposed to being forced to read each story sequentially.

So taking you through an example of the improved method leveraging user stories as living documentation...

In a project workshop I would pop open Confluence and navigate to the project’s space I had already set up. As we identified each user story, I would create a new page under the top-level “User Stories” page (I had created a specific user story template to help with this). The user story page would have a title (e.g. “Login”), the user story, and then under that the bullet points of acceptance criteria.

When creating the JIRA task I would no longer just copy the story contents, but simply add the link to the specific user story Confluence page as the description of the JIRA task. Whilst this did add a little overhead to get to the guts of the story from JIRA (adds 1 click), the benefits of centralising the story far outweighed it, and my team and clients got used to it quickly.

In terms of the technical implementation details, I would add those in the same description field of the task, as a bullet point list under a heading called "Technical details". I would often do the first iteration of the technical notes, then run through them with my team in the internal sprint kickoff, where we would usually flesh them out with more information.

When any changes were required to existing features, the existing user story was updated, and the specific changes were highlighted in a unique colour (our team and clients understood that colours in stories and acceptance criteria indicated a change to existing functionality). A new JIRA task would be created for each of those changes, and the description of the task had not only the URL of the user story’s Confluence page, but also the specific colour the change related to. E.g.

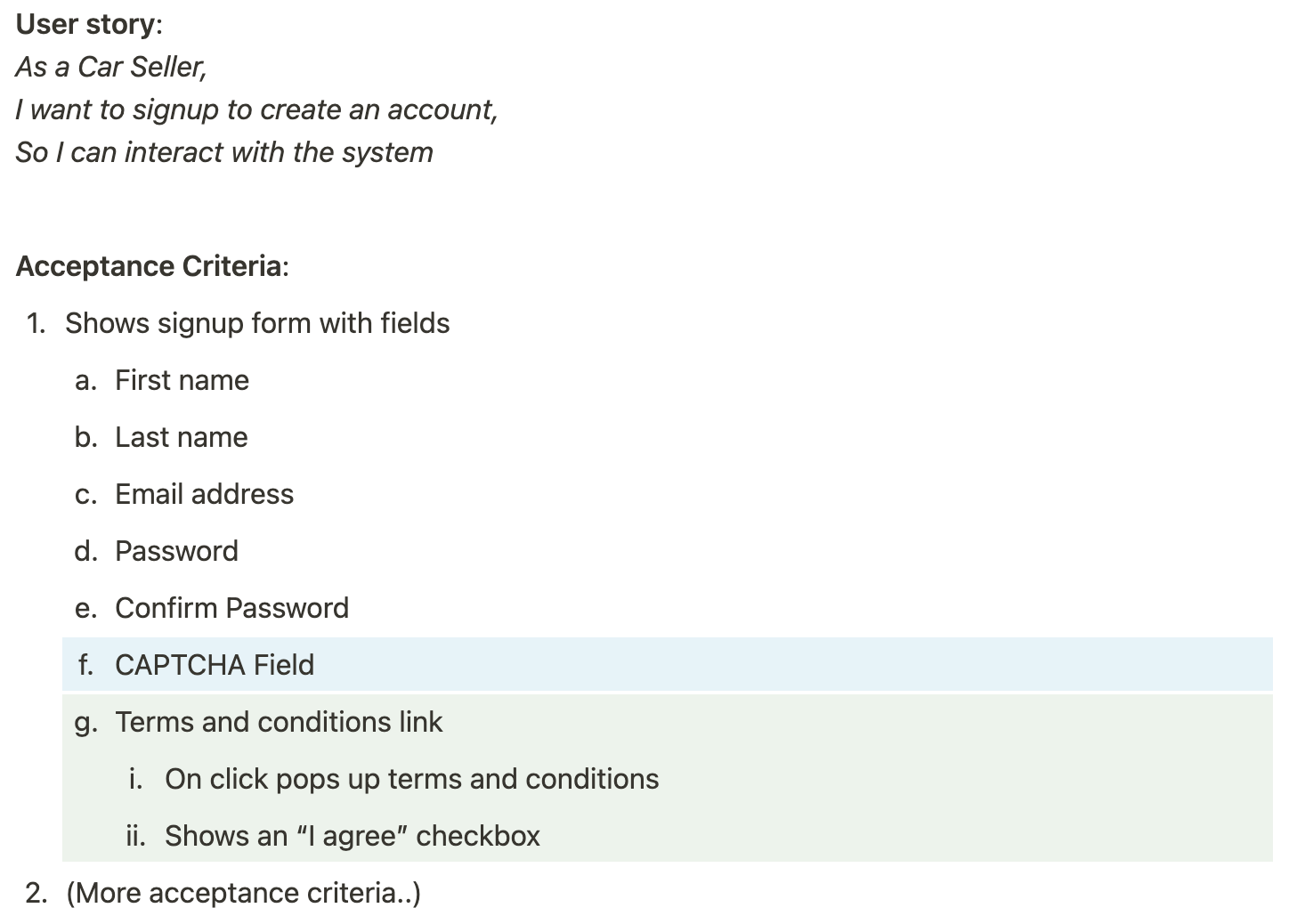

Page for user story “Signup”

This represents two new changes, a new CAPTCHA field, and a new T&C’s link. There is no meaning to the specific colours, other than that each colour will be implemented as a single JIRA task. Using the colours helped us differentiate changes visually, whilst still keeping the entire feature as a single story.

So from this example, two new tasks would be created in JIRA. Both had a description that linked to this user story’s page, but one also mentioned “see blue highlight” and the other “see green highlight”.

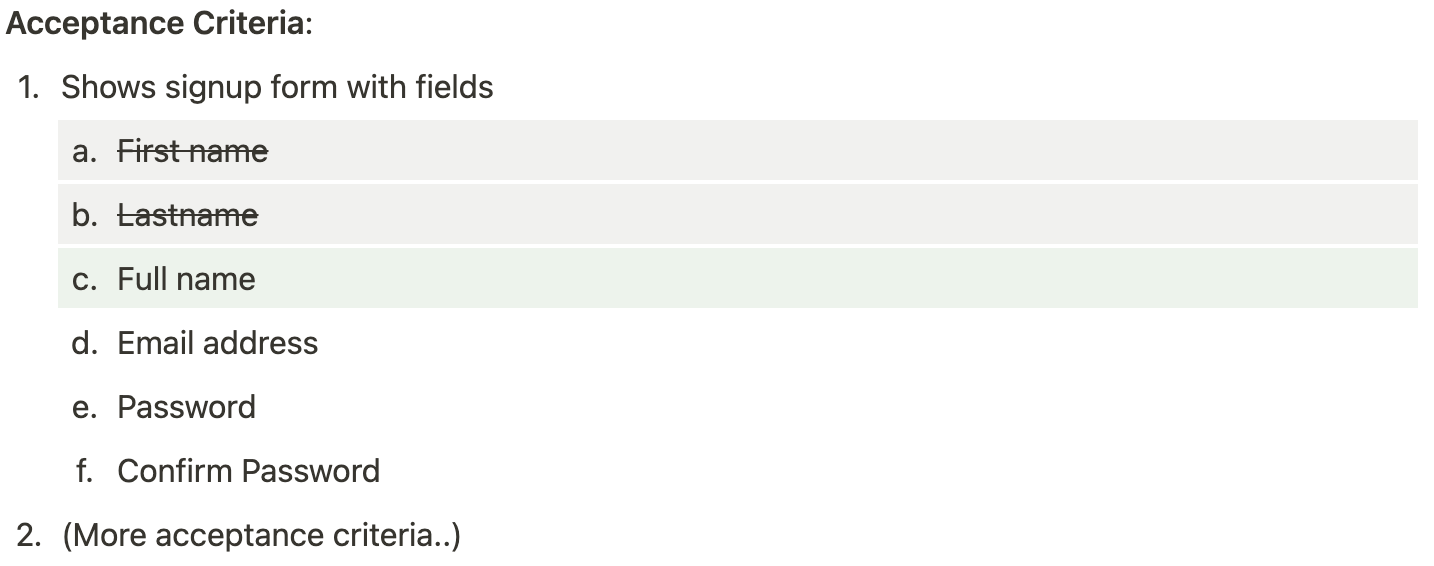

Similarly, for deleting functionality we would strikethrough with a grey background. E.g.

This represents the First name and Last name field are to be removed, and the new Full name field to be added.

Once the sprint was released, we then cleared all colours and strikethroughs from the stories (taking care not to remove any that were actually still in development in an ongoing sprint).

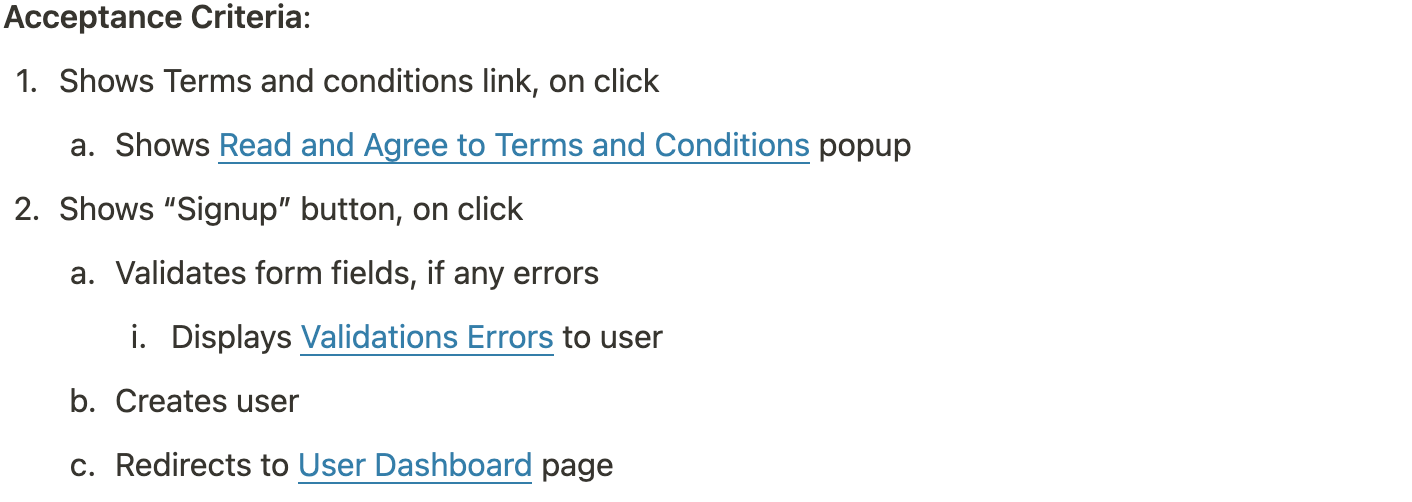

Another strength that evolved, was linking to other user stories in the acceptance criteria, e.g.

These are just made-up examples, but the blue underlined text represents links to other user stories in the system. It can allow you to create more self-contained stories (e.g. Read and Agree to Terms and Conditions) or reuse stories that are shared across many features (e.g. Displays Validation Errors to user).

Linking turned a user story into a quasi-interactive map of the system, and turned out to be very powerful in keeping user stories maintainable.
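
One way to picture that map is as a graph of stories. The Python sketch below is purely illustrative (we did this by clicking links in the wiki, not with code), and the `LINKS` data and the function name `stories_affected_by` are hypothetical. It walks the links backwards to list every story that depends on a story you are about to change, directly or through other stories.

```python
from collections import deque

# Hypothetical story titles; each maps to the stories its acceptance criteria link to.
LINKS = {
    "Signup": ["Terms and Conditions", "Validation Errors"],
    "Login": ["Validation Errors"],
    "Terms and Conditions": [],
    "Validation Errors": [],
}


def stories_affected_by(changed: str, links: dict[str, list[str]]) -> set[str]:
    """Return every story that links to `changed`, directly or via other stories."""
    # Invert the links so we can walk from a story to the stories referencing it.
    referenced_by: dict[str, set[str]] = {}
    for title, targets in links.items():
        for target in targets:
            referenced_by.setdefault(target, set()).add(title)

    affected: set[str] = set()
    queue = deque([changed])
    while queue:
        current = queue.popleft()
        for parent in referenced_by.get(current, ()):
            if parent not in affected:
                affected.add(parent)
                queue.append(parent)
    return affected


affected = stories_affected_by("Validation Errors", LINKS)
```

Here `affected` contains "Signup" and "Login", which is the kind of impact check that is hard to do from memory on a large system.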

As I mentioned, making sure documentation is kept up to date is the hardest nut to crack, but as soon as we had one central location for a user story it became easier. The process looked like this...

- Client requests changes (via phone, email, JIRA, whatever)

- The exact changes/requirements are confirmed (again via whatever medium, ideally JIRA comments)

- The changes are then written into the existing user story (or stories), and highlighted to emphasise changes from existing functionality.

- New JIRA tasks are created for these changes (referencing the link to the User story and the corresponding change colour this task relates to)

- The client is then asked to review these changes - meaning the client needs to re-read each affected user story (which gives them the whole context), and confirm that the new requirements are highlighted

- When the change is confirmed, this becomes the source of truth for everyone involved (through development and testing).

- Once a release is deployed, any changes highlighted in the stories in that release are then unhighlighted (meaning they are now live, and part of the system).
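
The highlight-then-clear part of this process can be sketched as a small data model. This is a hedged illustration in Python only: in practice the highlighting was done by hand in the wiki, and the class names, the `change` values and the JIRA keys such as `CAR-101` are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Criterion:
    text: str
    change: Optional[str] = None  # None = live, "add" = highlighted, "remove" = struck through
    task: Optional[str] = None    # hypothetical JIRA key of the task implementing the change


@dataclass
class Story:
    title: str
    criteria: list[Criterion] = field(default_factory=list)

    def release(self, released_tasks: set[str]) -> None:
        """Clear highlights and apply removals for tasks that have gone live."""
        kept = []
        for criterion in self.criteria:
            if criterion.task in released_tasks:
                if criterion.change == "remove":
                    continue  # struck-through criterion is dropped from the story
                criterion.change, criterion.task = None, None  # now simply live
            kept.append(criterion)
        self.criteria = kept


signup = Story("Signup", [
    Criterion("Has the fields name, email, password, confirm password"),
    Criterion("Has a CAPTCHA field", change="add", task="CAR-101"),
    Criterion("Has a link to the T&Cs", change="add", task="CAR-102"),
    Criterion("Has a First name field", change="remove", task="CAR-101"),
])
signup.release({"CAR-101"})  # CAR-102 is still in an ongoing sprint
```

After the release, the CAPTCHA criterion is plain live text, the First name criterion is gone, and the T&Cs change stays highlighted because its task has not shipped yet.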

Once the documentation was living (and reliable), we found the same user stories got referenced at many touch points:

- The project workshop - user stories were created

- Refinement before estimation - user stories were tweaked and detail added

- Client confirmation - user stories were sent to them in the proposal

- Client kickoff workshops - all user stories were run through with the client (again!)

- Internal team kickoff - all user stories were run through with the developers (would often bring up items to confirm with client)

- Sprint kickoff - sprint user stories were run through with client and devs

- Development - devs would reference the story to develop against

- Dev testing - devs would manually test the acceptance criteria of other devs’ features

- Quality Assurance Testing - internal tester would manually test all acceptance criteria

- User acceptance testing - client would be given a UAT document that lists all stories in the sprint, and would manually test all acceptance criteria

- Release notes to client - user stories (and links to the pages) were circulated to client to confirm what had just been released

- System changes and new features - user stories would be updated and confirmed

- Estimates - user stories would be integral for checking possible impacts or effects of the change

- New developers - user stories would be an integral part of them understanding the system

- New Client staff - often clients would give the same user stories to their own staff

The number of touch points the information had showed us just how valuable it was, and whilst it takes a small bit of time up front, it paid huge dividends long term.

What living user story documentation solved 🏅

There were definitely times that keeping the user stories and acceptance criteria up to date seemed cumbersome, but the proof was clear: our bug count went down, our developers were happier, and our clients loved it. Other benefits we found:

- A new sprint was no longer as risky as it once was, even if it was many months after the previous release.

- Developers could switch between projects more easily, as people no longer had knowledge siloed in their heads

- Changes to features were now part of the core documentation, meaning context was available at all times

- Clients embraced having access to the living user stories, and often used it as the documentation that they themselves didn’t have

For some projects that spanned many years, the living user story documentation became such an asset that I feel it really strengthened our client relationships. And as a sales piece, it was easy to quickly show potential clients the amount of detail we would capture (and keep) about their projects - it’s like they got a detailed manual for free (our user stories were often integral to the creation of actual manuals for the client’s users too).

Naturally, bugs in the user stories themselves can still happen, through either misunderstandings or unclear requirements. However, we had multiple touch points to catch these, and they are easier to fix than code. If writing functionality in “user story” style isn’t your thing, that’s fine. The real learnings here are more around living documentation, and the benefits it can have for your business and your client’s business.